REST APIs

APIs and the layered cake

REST: the quintessential practical HTTP API

The History

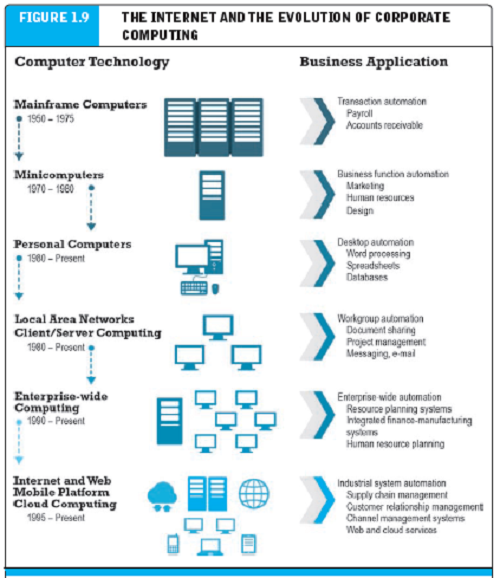

When computers were so expensive that a large company could only afford one, we built systems to maximize their utility: mainframes and 'terminals'.

In essence, everyone was using the exact same machine, even though there were many places to access it from.

That mainframe was the oracle that had everything: the data, the UX, and direct access.

As personal computers made things cheaper, people networked them to the mainframe and logged into it remotely through terminal emulators (because terminals were still the paradigm). This widened the pool of people who could use computers effectively, so we started to hide the terminal in the background and give people applications on their personal computers that managed data access to the mainframe.

In that world, replacing a company's mainframe or databases was very difficult. It was necessary to keep up performance-wise, yet it could halt progress and put the company at risk. Meanwhile, the networks inside companies (designed to give employees access to the data) started to connect to each other, forming the internet: a network between networks.

As the vision of what the internet could mean began to take hold, we had to standardize on protocols that allow strangers to work together.

I believe the concept of internet APIs emerged at the height of Object-Oriented Programming's rise to popularity.

APIs are all about the separation of concerns

In good OOP, you code to an interface, not an implementation.

The goal is to reduce "strong coupling" between the parts of your system. The less you need to know in order to use a service the better.

If you code to an interface, your team can work in parallel, parts of the system can be completely reworked, you need fewer meetings, you can serve a wider range of clients, and code gets cleaner, so there are fewer bugs.
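To make "code to an interface" concrete, here is a minimal Python sketch. `UserStore`, `DictUserStore`, `ListUserStore`, and `greet` are hypothetical names invented for illustration: `greet` only knows about the abstract `UserStore`, so either storage implementation can be swapped in without touching it.

```python
from abc import ABC, abstractmethod
from typing import Optional


class UserStore(ABC):
    """The interface: all a caller may rely on is get_name()."""

    @abstractmethod
    def get_name(self, user_id: int) -> Optional[str]:
        """Return the user's name, or None if there is no such user."""


class DictUserStore(UserStore):
    """One implementation: names kept in a dict keyed by id."""

    def __init__(self, names: dict[int, str]) -> None:
        self._names = dict(names)

    def get_name(self, user_id: int) -> Optional[str]:
        return self._names.get(user_id)


class ListUserStore(UserStore):
    """A completely different implementation: a list of (id, name) rows."""

    def __init__(self, rows: list[tuple[int, str]]) -> None:
        self._rows = list(rows)

    def get_name(self, user_id: int) -> Optional[str]:
        for row_id, name in self._rows:
            if row_id == user_id:
                return name
        return None


def greet(store: UserStore, user_id: int) -> str:
    """Client code: written against the interface, not either implementation."""
    name = store.get_name(user_id)
    return f"Hello, {name}!" if name is not None else "No such user"
```

`greet(DictUserStore({1: "Ada"}), 1)` and `greet(ListUserStore([(1, "Ada")]), 1)` both return `"Hello, Ada!"`; the caller never learns which storage it was given.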

REST comes from Roy Fielding's 2000 PhD dissertation, so its original intention is more abstract than how it ended up being used. BUT at its heart it is THE meta interface for data. Since we now all know to code to interfaces, REST APIs arrived as the right mix of a simple, scalable, pleasant, and implementation-agnostic way to access your data on the internet.

Engineering your backend with REST APIs

REST is a convention that helps you build a simple interface to your data. When done well, most client-side developers will immediately understand how to use your services. That lets many different apps, often including community-built ones, be built on the same server. You can even swap out databases without any client-side code needing to change.

CRUD as your guide

The four central functions of a data-driven app (which is pretty much every app) are:

- C-Create

- R-Read

- U-Update

- D-Delete

When planning a web app, you have your skeleton in place once you can do these four things.
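As a sketch, the four CRUD operations on one kind of record fit in a small in-memory Python class. `CrudStore` and its method names are hypothetical; a real app would back them with a database table rather than a dict.

```python
from typing import Optional


class CrudStore:
    """A minimal in-memory store exposing Create, Read, Update, Delete."""

    def __init__(self) -> None:
        self._items: dict[int, dict] = {}
        self._next_id = 1

    def create(self, data: dict) -> int:
        """C: store a copy of data under a fresh id and return that id."""
        item_id = self._next_id
        self._next_id += 1
        self._items[item_id] = dict(data)
        return item_id

    def read(self, item_id: int) -> Optional[dict]:
        """R: return a copy of the record, or None if it does not exist."""
        item = self._items.get(item_id)
        return dict(item) if item is not None else None

    def update(self, item_id: int, data: dict) -> bool:
        """U: replace an existing record; False if there was nothing to update."""
        if item_id not in self._items:
            return False
        self._items[item_id] = dict(data)
        return True

    def delete(self, item_id: int) -> bool:
        """D: remove the record; False if it was already gone."""
        return self._items.pop(item_id, None) is not None
```

Every later piece of a data-driven app (screens, URLs, permissions) ends up calling some combination of these four methods.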

REST as CRUD

REST stands for Representational State Transfer, and there are purists who will happily debate the RESTfulness of your design with you. The philosophy behind REST is: make stateless, simple, uniform client interfaces for server resources.

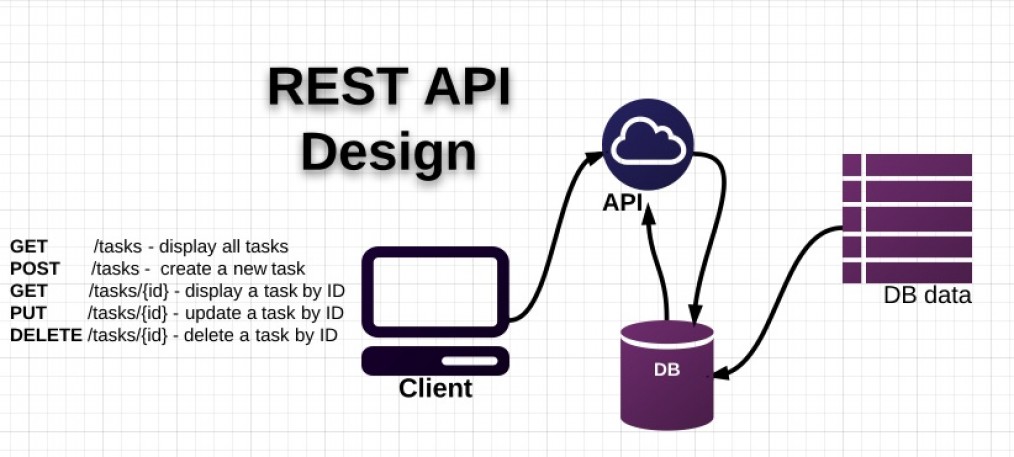

A REST API has two parts. One maps URLs to your resources; the other maps HTTP methods to your actions.

Mapping URLs to resources

So think about the objects in your app. They might be students and courses, or users and purchases, but they typically align with database tables.

I tend to think in terms of nested collections and identifiers.

As an example, suppose you have users, where each user has a unique user id and a collection of permissions. Then I might return those values at the following URLs (the :attribute notation indicates a variable):

- /users would represent the collection of users

- /users/:userid would represent the single user with user id :userid

- /users/:userid/permissions would represent the collection of permissions that the specific user with user id :userid has

- /users/:userid/permissions/:permission_code might be the specific status of that user and that permission
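The :attribute notation is straightforward to implement. Here is a minimal Python sketch that matches a request path against the four patterns above and pulls out the variables; `match`, `resolve`, and `ROUTES` are hypothetical names, and real web frameworks ship their own, more capable routers.

```python
from typing import Optional


def match(pattern: str, path: str) -> Optional[dict[str, str]]:
    """Match a concrete path against a pattern such as '/users/:userid'.

    Segments that start with ':' are variables. Returns a dict of the
    captured variables, or None if the path does not fit the pattern.
    """
    pattern_parts = pattern.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(pattern_parts) != len(path_parts):
        return None
    params: dict[str, str] = {}
    for pat, seg in zip(pattern_parts, path_parts):
        if pat.startswith(":"):
            if not seg:  # a variable must capture a non-empty segment
                return None
            params[pat[1:]] = seg
        elif pat != seg:
            return None
    return params


# The four resources from the list above.
ROUTES = [
    ("/users", "the collection of users"),
    ("/users/:userid", "a single user"),
    ("/users/:userid/permissions", "that user's permissions"),
    ("/users/:userid/permissions/:permission_code", "one permission of that user"),
]


def resolve(path: str) -> Optional[tuple[str, dict[str, str]]]:
    """Return (description, captured variables) for the first matching route."""
    for pattern, description in ROUTES:
        params = match(pattern, path)
        if params is not None:
            return description, params
    return None
```

For example, `resolve("/users/42/permissions/admin")` returns `("one permission of that user", {"userid": "42", "permission_code": "admin"})`. Because each level of nesting adds one segment, the URL itself tells you which collection or item you are addressing.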

Once you have a URL that represents a particular collection or object (a resource), you can map the HTTP verbs to actions on that resource:

- GET == Read

- POST == Create

- PUT == Update

- DELETE == Delete

Suppose I wanted to keep many users, each with an id. I could imagine the following RESTful interface/contract/agreement:

POST /user

(create a user and return their id)

GET /user/:user_id

(get information about user :user_id)

PUT /user/:user_id

(alter the information about user :user_id)

DELETE /user/:user_id

(destroy user :user_id)
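To see this contract working without a network, here is a hypothetical in-memory Python sketch. `UserApi.handle` takes a method and a path and returns a `(status, body)` pair. The status codes (201 Created, 204 No Content, 404 Not Found, 405 Method Not Allowed) are standard HTTP codes chosen for this sketch; the contract above does not specify them.

```python
import re
from typing import Any, Optional


class UserApi:
    """Hypothetical in-memory implementation of the /user contract."""

    _ITEM = re.compile(r"/user/(\d+)")  # matches e.g. /user/2

    def __init__(self) -> None:
        self._users: dict[int, dict] = {}
        self._next_id = 1

    def handle(self, method: str, path: str, body: Optional[dict] = None) -> tuple[int, Any]:
        # POST /user: create a user and return their id.
        if path == "/user":
            if method == "POST":
                user_id = self._next_id
                self._next_id += 1
                self._users[user_id] = dict(body or {})
                return 201, {"id": user_id}
            return 405, {"error": "method not allowed"}

        # Everything else must be /user/:user_id for an existing user.
        m = self._ITEM.fullmatch(path)
        if m is None:
            return 404, {"error": "no such resource"}
        user_id = int(m.group(1))
        if user_id not in self._users:
            return 404, {"error": "no such user"}

        if method == "GET":     # read
            return 200, dict(self._users[user_id])
        if method == "PUT":     # update (replace the stored data)
            self._users[user_id] = dict(body or {})
            return 200, dict(self._users[user_id])
        if method == "DELETE":  # destroy
            del self._users[user_id]
            return 204, None
        return 405, {"error": "method not allowed"}
```

A session might look like `api.handle("POST", "/user", {"name": "Ada"})` returning `(201, {"id": 1})`, then `api.handle("GET", "/user/1")` returning `(200, {"name": "Ada"})`. Notice that the client only needs the method and URL; it never sees the dict behind them.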

If that is my contract and my API is hosted at http://myapi.com, then someone who puts the URL http://myapi.com/user/2 into a browser (which sends a GET request) should see data about user number 2.
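Here is a minimal sketch of that idea using only Python's standard library (`http.server` and `urllib.request`). It serves just `GET /user/:user_id` from a hypothetical hard-coded `USERS` dict standing in for a database; `UserHandler`, `start_server`, and `fetch` are also hypothetical names, with `fetch` playing the client.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical sample data standing in for a real database.
USERS = {1: {"id": 1, "name": "Ada"}, 2: {"id": 2, "name": "Grace"}}


class UserHandler(BaseHTTPRequestHandler):
    """Answers GET /user/:user_id with that user's data as JSON."""

    def do_GET(self) -> None:
        # Drop any query string, then split '/user/2' into ['user', '2'].
        parts = urlsplit(self.path).path.strip("/").split("/")
        user = None
        if len(parts) == 2 and parts[0] == "user" and parts[1].isdecimal():
            user = USERS.get(int(parts[1]))
        status, payload = (200, user) if user is not None else (404, {"error": "not found"})
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # silence the default per-request logging to stderr


def start_server(port: int = 0) -> HTTPServer:
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), UserHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# An opener with no proxies, so requests to 127.0.0.1 always go direct.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch(url: str):
    """Client side: GET the URL and return (status, decoded JSON body)."""
    try:
        with _OPENER.open(url) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())
```

To try it from a browser instead, you could run `HTTPServer(("127.0.0.1", 8000), UserHandler).serve_forever()` and open http://127.0.0.1:8000/user/2, which should show Grace's record.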

REST in the wild: Find the Twitter REST API. How is it structured? What sort of resources does it grant access to? How about Reddit? Does it have a REST API? What does it show you about their data stores?